Adventures in writing a custom game engine

Introduction

Hello all! Between stress from University and the pressure of releasing a Steam game, sometimes you just gotta take some time off and do something relaxing while also investing in your future. Like writing a custom game engine from the ground(ish) up. Today, and hopefully in the future (if I don’t give up), I’m going to ramble about my experiences in planning out and writing a custom game engine, with the hope that someone might find this interesting or let me know if I do or say something really stupid.

But why?

Simply put: convenience. I need an engine that:

- Handles remote collaboration through Git or other version control well

- Is easy to port to multiple platforms (consoles included)

- Is reasonably priced (cheap or free)

- Supports fancy GPU stuff like shaders

- Uses a language I like

- Plays nice with FMOD, something my sound designer needs for future projects

- Handles 2d pixel art well.

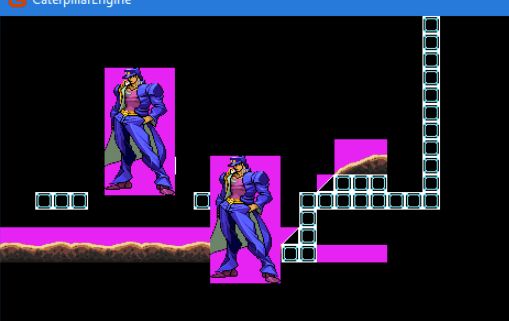

The more games I work on nowadays, the larger the teams seem to be. Currently PSYCRON is a two-person effort with a bunch of other amigos helping out with feedback, testing and promotion. GameMaker Studio 2 (the engine I’m using) works great when I’m the only one making changes to the codebase, but it quickly becomes a problem if someone who isn’t me wants to try out their new art, audio, or dialogue. To get or test their assets in the game they have to:

- Send me all their suggestions/changes/new files and wait for me to load them in/finish what I’m doing THEN load them in

- Buy/download/get comfortable with GameMaker and load them in themselves, later merging changes in through Git.

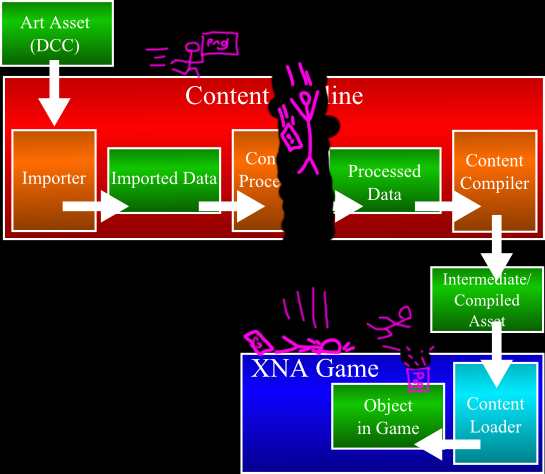

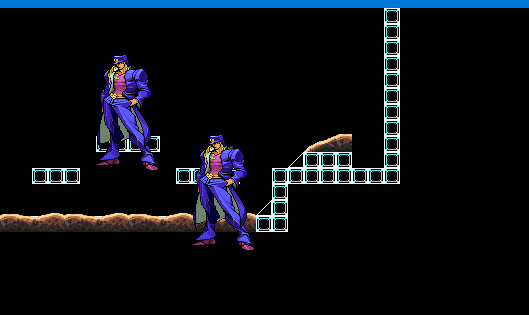

Here’s option 1, my current pipeline for jam games and the like, illustrated.

My nightmares (and life sometimes), visualized.

This isn’t feasible for longer and larger projects, which led me to set my sights elsewhere. But which engine to choose?

100 different game engines on the wall, 100 different game engines-

GameMaker Studio 2

Game Maker (or GameMaker now), my first engine and foray into “reeeal programming”.

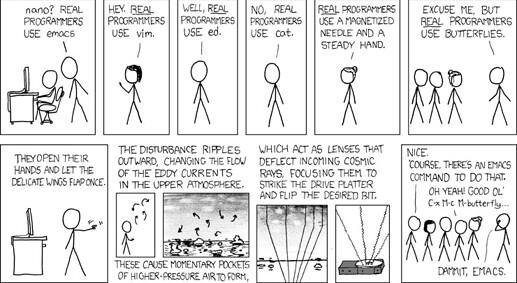

This felt appropriate for a post about reinventing the wheel, but I couldn’t find anywhere else to put it.

I love this engine, it’s like game development comfort food for me. It has some weirdness to it (z-testing is enabled for surfaces by default, in a 2d engine, why???) but it’s great for prototyping and getting something up and running quickly. Unfortunately, when you start to look at larger projects you run into issues. With a lot of different objects and scripts, the IDE starts to slow down, especially on laptops or lower-end computers. While all the tools in GameMaker are pretty decent, they tend to chug if you’ve got too many open at once.

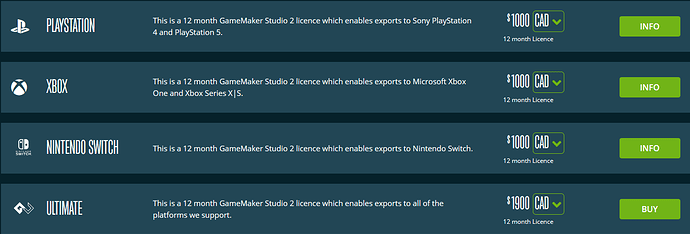

GML (GameMaker’s proprietary scripting language) has come a long way since 2012 when I first started, introducing some more OOP stuff with constructors and sort-of inheritance within them. It’s very forgiving, with semicolons being more of a suggestion than a hard rule, but it has no static typing, no simple way to set default values for function parameters, and no function overloading (someone from YoYo actually personally shot this idea down when I suggested it in a beta). After three semesters of C++, these are features I’m really starting to miss. Console porting is also preeeeetty expensive.
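For readers who haven’t used them, here is a minimal C++ sketch of the three features mentioned above: static typing, default parameter values and function overloading. Every name in it (`Vec2`, `Bullet`, `spawn_bullet`, `describe`) is hypothetical and made up for illustration; none of it comes from PSYCRON or from any real engine.

```cpp
#include <cassert>
#include <string>

// Static typing: the compiler knows these fields are floats and
// rejects code that tries to store, say, a string in them.
struct Vec2 {
    float x;
    float y;
};

struct Bullet {
    Vec2 position;
    float speed;
    int damage;
};

// Default parameter values: callers may leave out speed and damage.
// (Hypothetical helper, for illustration only.)
Bullet spawn_bullet(Vec2 position, float speed = 4.0f, int damage = 1) {
    assert(speed >= 0.0f);  // simple precondition check
    return Bullet{position, speed, damage};
}

// Function overloading: one name, several parameter types.
// The compiler picks the version that matches the argument.
std::string describe(int value) {
    return "int: " + std::to_string(value);
}

std::string describe(const std::string& value) {
    return "string: " + value;
}
```

Calling `spawn_bullet(Vec2{1.0f, 2.0f})` fills in the default speed and damage, while `describe(7)` and `describe(std::string("hi"))` resolve to different functions at compile time.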

Part of my wallet died inside looking at this. Note, that’s $1000 for 12 MONTHS.

The 2.3.1 update made it play significantly nicer with Git, but it’s still not perfect. There are also no native FMOD bindings, and while I can probably write a .dll for Windows to handle that (I actually got about 90% of the way there, loading banks, playing sounds and everything), porting that to every other platform I’d want to export to would be a nightmare.

For its pricing, scalability issues and weird audio engine (which I didn’t get into here), GMS is out of the question, at least for larger projects.

Unity

Unity… is alright. If I was interested in doing 3d stuff, it’d probably be my engine of choice as it’s really great at doing that from what I’ve seen. One of my main issues with it is that it feels designed with 3d in mind, with 2d being a later addition and pixel art 2d being even less of a priority. I got to play around with it in school in a game development class (the majority of which was spent making PSYCRON, thanks Mr. Wu!) and using it feels like everything and the kitchen sink is included.

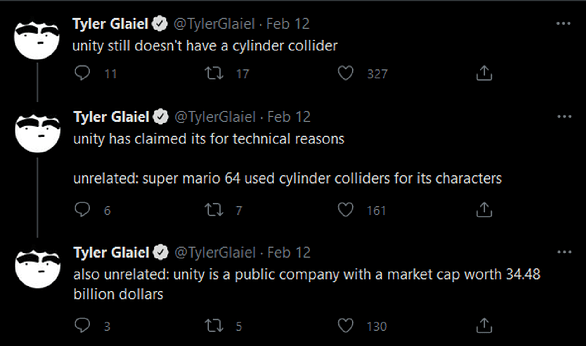

I feel like it shouldn’t take this long?

There’s probably ways to poke Unity with a sharp stick and force it to do what I want but that seems like a lot more trouble than it’s worth.

Funny thing is that Unity says its pill collider is a cylinder collider with two half circle colliders for the end caps, so the mythical cylinder exists somewhere.

It’s also “rental software”, with a yearly cost to use its pro version, which I’m not really a fan of. As far as yearly software goes its prices are reasonable-ish ($400 a year, per person), but I prefer to just pay once and own my software outright rather than have Unity hook up an IV to my wallet, with the possibility of a pro licence disappearing into smoke if their servers ever die.

Unreal

Similar to Unity, it’s really good at what it does, but what Unreal does well isn’t something I’m interested in making. Its revenue share, as opposed to fining me once per year, is nice, but it has the Unity issue of being a 3d engine with 2d feeling like an afterthought.

Godot

From what I’ve seen of it, free and open source Unity without a lot of the bloat. It’s pretty awesome! Except that console porting has to be done by an external company and its FMOD situation is questionable. So close!

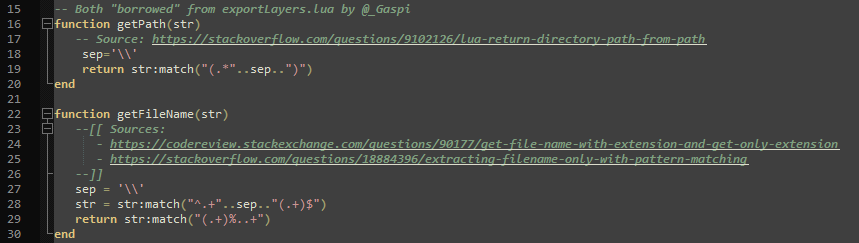

Frameworks

So, some interesting engines, but none of them really fit exactly what I want to do. With the fact that I’ve also got to start job hunting soon weighing heavily on my mind, and wanting something that isn’t “games made in a language that’s the weird kid of JavaScript and C++” to stick on my resume and GitHub, I started looking at another option: building my own engine using a framework.

SDL2

I’ve been on and off with SDL2, and if I ever really “git gud” with another graphics API I’d probably still use SDL2 to handle basic keyboard and gamepad input because it does that really well. SDL’s rendering was a bit of a shock at first coming from GameMaker but I got comfortable pretty quickly. It also has native Switch support and has some really nice C++ bindings!

Unfortunately, it’s missing one feature which absolutely kills me: its built-in renderer has no shader support! I use shaders a lot, from hitflashes to wave distortion to repaletting sprites (absolutely essential), and while I can probably make some simple games in SDL2, trying to expand beyond that without shaders isn’t really viable for me.
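To give a rough idea of what two of those effects compute per pixel, here is a small CPU-side C++ sketch. It is not shader code (a real version would live in a fragment shader written in something like GLSL or HLSL) and it is not taken from PSYCRON. The names `Color`, `Palette`, `hit_flash` and `palette_swap` are hypothetical, and storing the palette index in the sprite’s red channel is just one common approach, assumed here for illustration.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// One RGBA pixel, 8 bits per channel.
struct Color {
    std::uint8_t r, g, b, a;
};

bool operator==(const Color& lhs, const Color& rhs) {
    return lhs.r == rhs.r && lhs.g == rhs.g &&
           lhs.b == rhs.b && lhs.a == rhs.a;
}

// Hit flash: blend the pixel's colour toward white.
// amount = 0 leaves the pixel alone, amount = 1 makes it fully white.
// Alpha is untouched so the sprite keeps its silhouette.
Color hit_flash(Color src, float amount) {
    assert(amount >= 0.0f && amount <= 1.0f);
    auto mix = [amount](std::uint8_t c) {
        // Linear interpolation toward 255, rounded to nearest.
        return static_cast<std::uint8_t>(c + (255 - c) * amount + 0.5f);
    };
    return Color{mix(src.r), mix(src.g), mix(src.b), src.a};
}

// Assumed palette size for this sketch.
constexpr std::size_t kPaletteSize = 4;
using Palette = std::array<Color, kPaletteSize>;

// Palette swap: treat the red channel as an index into a palette
// and output that palette entry, keeping the source alpha.
Color palette_swap(Color src, const Palette& palette) {
    assert(src.r < kPaletteSize);
    Color out = palette[src.r];
    out.a = src.a;
    return out;
}
```

Swapping the `Palette` passed in recolours every sprite that uses those indices without touching the sprite data itself, which is why this kind of effect is so handy for pixel art.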

SFML

Simpler than SDL2 but covers a lot of the bases I want. It has some nice C++ bindings and supports shaders, yay! This was almost the framework I went with until I discovered that it just doesn’t support consoles. Oh well.

OpenGL

Hoooooooh boy was OpenGL tempting. Lots of tutorials, supported by literally everything imaginable, would look amazing on a resume… But it’s just too complicated for me and what I want to do. The sheer amount of work that’s involved to get a triangle on screen then getting a texture on that; trying to extend and make an entire engine with it? That’s a bit much for me. Who knows, maybe I’ll flip-flop back to it one day but I’m definitely not ready yet (props to Troid for figuring it out and using it in NME though).

PyGame

lol

It seems I’ve hit a dead end. I’m out of options.

But wait-

In the distance? Is that the ghost of Christmas Past?

An old engine long forgotten? My saviour?

It is! MonoGame!

MonoGame

Okay, it’s C# (ehhhhhh) not C++ BUT-

- It supports shaders

- It supports consoles

- It’s designed to work with Visual Studio, so that should play well with Git, right?

- Right?

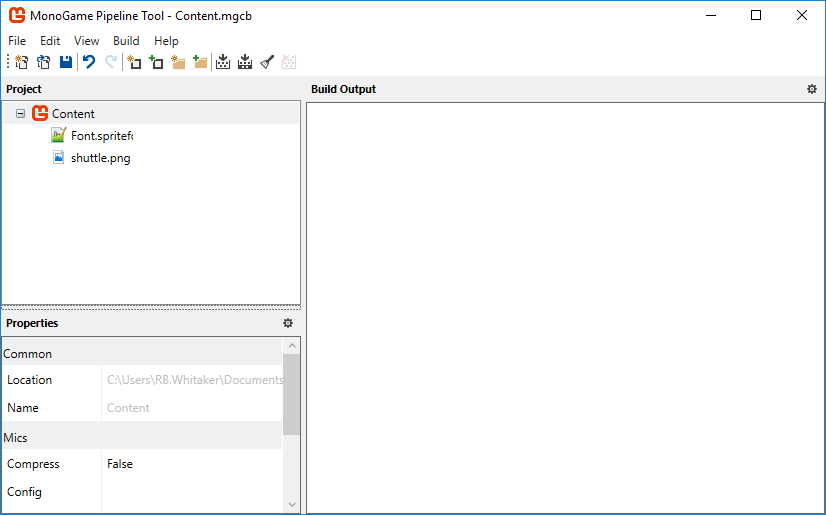

- Real neat extendable pipeline tool

- It’s free.

MonoGame is in this weird perfect in-between for me where it handles just enough of the under-the-hood stuff (basic sprite blitting) so I’m not worrying about making triangles and quads to render to, but also leaves me free to design the systems that I actually find interesting. So, for now at least, it’s the framework I’m gonna run with.

This post has gone on long enough, so I hope whoever stuck it out or skimmed to the end found some of this interesting. Thoughts/suggestions are appreciated if anyone’s got them. Next time, I’d like to go into detail about creating a graphics pipeline: texture atlases and my misadventures with rectangle organization. Thanks for reading!